We recently ran into an issue at work where a large number of primary key and unique key constraints had not been named, but instead, allowed to have system-generated names (which appear as SYS_Cxxxxxx in the data dictionary). I decided to develop a package to dynamically rename these particular constraints, for a given table or en masse for all tables having such unnamed key constraints.

The impetus for doing this is that we support many clients with our database-centric application. Should a customer experience an error caused by a restricted operation on a primary or unique key (such as a DUP_VAL_ON_INDEX exception), it’d be nice to have the actual name of the key constraint that was violated. The constraint and index names should really be consistent across all customers. Additionally, system-generated names change whenever a schema in exported and imported. Naming the constraints keeps the names properly consistent, and falls into the proper realm of adherence to naming conventions.

Some premises/observations/caveats of note:

- We desire to change system-generated key constraints to a standard form, such as pk_

(up to 30 chars) and have the index name match as well. - Some table names are too long to follow the simple PK_

pattern. The key name is therefore truncated to 30 chars. If that results in a name collision, chop it to 29 and add a digit. If there is still a name collision, increment the trailing digit until one is available (up to 9 tries). - All currently developer-named key constraints have index names that match.

- Some key constraints with system-generated names may have underlying indexes that are developer-designed. This occurs when Oracle decides an existing index is suitable for supporting the constraint. These are not modified.

- While a table can have at most one (1) primary key, it can have one or more unique keys.

- For regular tables, we can simply rename the constraint and underlying index.

- We can't rename the underlying index for Global Temporary Tables (GTT's); instead, disable the constraint and drop the index, enable and recreate the index.

- I’m only doing this for the current schema.

That said, I began by prototyping a query to locate system-generated primary key constraints named the same as the underlying system-generated index names:

SELECT uc.table_name, uc.constraint_name, uc.index_name

FROM user_constraints uc

WHERE uc.constraint_type IN ('P', 'U')

AND uc.constraint_name LIKE 'SYS_C%'

AND uc.index_name LIKE 'SYS_C%'

ORDER BY uc.table_name;

From my list of caveats above, I also need to add whether this is a global temp table. So I add a scalar subquery to the Select clause:

SELECT uc.table_name, uc.constraint_name, uc.index_name

,(SELECT ut.TEMPORARY

FROM user_tables ut

WHERE ut.table_name = uc.table_name) TEMPORARY

FROM user_constraints uc

WHERE uc.constraint_type IN ('P', 'U')

AND uc.constraint_name LIKE 'SYS_C%'

AND uc.index_name LIKE 'SYS_C%'

ORDER BY uc.table_name;

I need to know whether the new name already exists. If it does, I have to implement the incremental numbering suffix scheme. Since I may be using the query in more than one place, I should encapsulate it in a function for reuse.

-- does given constraint exist? -- should only be one of the given name FUNCTION cnt_constraint( p_constraint_name IN user_constraints.constraint_name%TYPE ) RETURN pls_integer IS v_cnt_exist pls_integer; BEGIN SELECT COUNT( * ) cnt INTO v_cnt_exist FROM user_constraints uc WHERE uc.constraint_name = p_constraint_name; RETURN (v_cnt_exist); END cnt_constraint;

This will return either a 1 or 0 – does or does not exist.

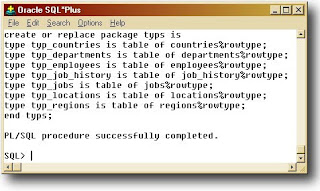

I’m building a package, so the interface (in the package header) is a good place to begin.

-- Optional tablename or NULL for all PROCEDURE rename_key(p_tablename IN user_constraints.table_name%TYPE);

Here is my assembled program, with comments:

PROCEDURE rename_key(

p_tablename IN user_constraints.table_name%TYPE)

IS

v_stmt VARCHAR2(4000); -- the dynamic statement

v_new_key_name VARCHAR2(30); -- new constraint name created

v_cnt PLS_INTEGER := 0;

v_error PLS_INTEGER := 0;

v_loop_cnt PLS_INTEGER;

-- normalize input name

v_table_name user_constraints.table_name%TYPE := UPPER(p_tablename);

BEGIN

-- a cursor FOR loop is adequate for the task at hand

-- this will process all system-named primary/unique keys/indexes found

-- for the given table or all tables if null

FOR rec IN (SELECT uc.table_name, uc.constraint_type, uc.constraint_name

,uc.index_name

,(SELECT ut.TEMPORARY

FROM user_tables ut

WHERE ut.table_name = uc.table_name) TEMPORARY

FROM user_constraints uc

WHERE uc.constraint_type IN('P', 'U')

AND uc.constraint_name LIKE 'SYS_C%'

AND uc.index_name LIKE 'SYS_C%'

AND (v_table_name IS NULL OR uc.table_name = v_table_name)

ORDER BY uc.table_name) LOOP

BEGIN

-- build the constraint name according to the naming convention

-- PK_ or UK_, up to 30 chars (the limit)

v_new_key_name := SUBSTR(rec.constraint_type || 'K_' || rec.table_name

,1

,30);

v_loop_cnt := 0;

-- does the new name already exist?

-- if so, chop the last character and add a digit

-- up to 9 tries (should be enough)

WHILE(cnt_constraint(v_new_key_name) > 0 AND v_loop_cnt <= 9) LOOP

DBMS_OUTPUT.put_line(v_new_key_name || ' already exists; adjusting...');

v_loop_cnt := v_loop_cnt + 1;

v_new_key_name := SUBSTR(rec.constraint_type || 'K_' || rec.table_name

,1

,29) ||

TO_CHAR(v_loop_cnt, 'FM9');

DBMS_OUTPUT.put_line('Trying ' || v_new_key_name || '...');

END LOOP;

-- assemble the alter table-rename constraint statement

v_stmt := 'alter table ' ||

rec.table_name ||

' rename constraint ' ||

rec.constraint_name ||

' to ' ||

v_new_key_name;

-- always a good idea to see what you've built

DBMS_OUTPUT.put_line(v_stmt || ';');

EXECUTE IMMEDIATE v_stmt; -- make it so!

-- special handling for GTT's

IF (rec.TEMPORARY = 'N') THEN

-- regular tables don't need special handling

-- assemble the alter index-rename statement

v_stmt := 'alter index ' ||

rec.index_name ||

' rename to ' ||

v_new_key_name;

DBMS_OUTPUT.put_line(v_stmt || ';');

EXECUTE IMMEDIATE v_stmt; -- rename index

ELSE -- can't rename index on global temp table

-- first, disable newly renamed constraint and whack index

v_stmt := 'alter table ' ||

rec.table_name ||

' disable constraint ' || v_new_key_name ||

' drop index';

DBMS_OUTPUT.put_line(v_stmt || ';');

EXECUTE IMMEDIATE v_stmt;

-- re-enable newly renamed constraint, building a new index

v_stmt := 'alter table ' ||

rec.table_name ||

' enable constraint ' || v_new_key_name ||

' using index';

DBMS_OUTPUT.put_line(v_stmt || ';');

EXECUTE IMMEDIATE v_stmt;

END IF;

v_cnt := v_cnt + 1;

EXCEPTION

-- handling errors on each loop iteration allows me

-- to continue renaming other constraints even if one fails

WHEN OTHERS THEN

DBMS_OUTPUT.put_line(SQLERRM);

v_error := v_error + 1;

END;

END LOOP;

-- housekeeping messages

DBMS_OUTPUT.put_line(v_cnt || ' Key constraints and their indexes adjusted.');

DBMS_OUTPUT.put_line(v_error || ' errors occurred.');

END rename_key;

/

-- now let's try it out

BEGIN

rename_key(null);

END;

/

Here is my output:

alter table UNK_GTT rename constraint SYS_C004797 to PK_UNK_GTT;

alter table UNK_GTT disable constraint PK_UNK_GTT drop index;

alter table UNK_GTT enable constraint PK_UNK_GTT using index;

alter table UNK_GTT rename constraint SYS_C004798 to UK_UNK_GTT;

alter table UNK_GTT disable constraint UK_UNK_GTT drop index;

alter table UNK_GTT enable constraint UK_UNK_GTT using index;

PK_UNK_PKEY already exists; adjusting...

Trying PK_UNK_PKEY1...

alter table UNK_PKEY rename constraint SYS_C004799 to PK_UNK_PKEY1;

alter index SYS_C004799 rename to PK_UNK_PKEY1;

alter table UNK_UKEY rename constraint SYS_C004800 to UK_UNK_UKEY;

alter index SYS_C004800 rename to UK_UNK_UKEY;

4 Key constraints and their indexes adjusted.

0 errors occurred.

Running it again, I should see no additional work done.

0 Key constraints and their indexes adjusted.

0 errors occurred.

I should also verify no system-named key constraints or indexes now exist.

SELECT uc.table_name, uc.constraint_name, uc.constraint_type

FROM user_constraints uc

WHERE uc.constraint_type IN('P', 'U') AND uc.constraint_name LIKE 'SYS_C%';

SELECT ui.table_name, ui.index_name

FROM user_indexes ui

WHERE ui.index_name LIKE 'SYS_C%';

If there were, it’s probably because one or the other key constraint and index weren’t system-named.

Here is my test case setup. I deliberately did not name the key constraints, and I needed a key constraint name that would conflict with a to-be generated one.

DROP TABLE unk_gtt CASCADE CONSTRAINTS; CREATE GLOBAL TEMPORARY TABLE unk_gtt ( ID INTEGER, str VARCHAR2(100), id2 INTEGER ) ON COMMIT DELETE ROWS; ALTER TABLE unk_gtt ADD ( PRIMARY KEY (ID), UNIQUE (id2)); DROP TABLE unk_pkey CASCADE CONSTRAINTS; CREATE TABLE unk_pkey ( ID INTEGER, str VARCHAR2(100) ); ALTER TABLE unk_pkey ADD ( PRIMARY KEY (ID) ); DROP TABLE unk_ukey CASCADE CONSTRAINTS; CREATE TABLE unk_ukey ( ID INTEGER, str VARCHAR2(100) ); ALTER TABLE unk_ukey ADD ( UNIQUE (ID) ); DROP TABLE unk_overlap; CREATE TABLE unk_overlap ( ID INTEGER, str VARCHAR2(100) ); ALTER TABLE unk_overlap ADD CONSTRAINT pk_unk_pkey PRIMARY KEY (ID); ALTER TABLE unk_overlap ADD ( FOREIGN KEY (id) REFERENCES unk_ukey (id));

Note the foreign key on unk_overlap shows that the pkey/ukey constraints can be renamed even if a foreign key exists on them. Changing the name of the key constraint doesn't impact the fkey; likewise, the fkey in place doesn't prevent you from renaming the key constraint.